Efficiency and scalability matter more than ever in how we ship software. Containerization, a way of packaging an application with everything it needs to run, and Kubernetes, a system for running those packages across a cluster of machines, have changed how teams deploy, manage, and scale applications. In this guide, we explore the fundamentals of both, along with their benefits and some best practices for using them. Along the way, you will see how containers and clusters can help your applications run more reliably and scale with demand.

Understanding Containerization: The Basics of Isolation and Efficiency

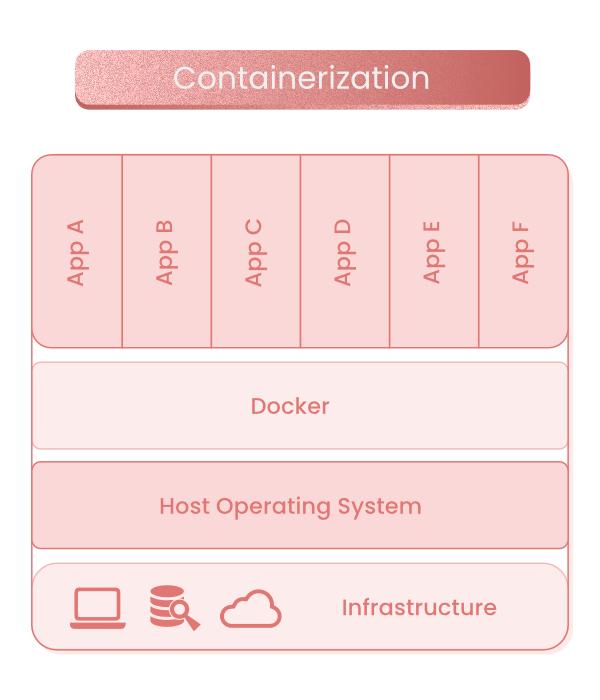

Containerization is a method of packaging, distributing, and running applications in an isolated environment known as a container. A container image bundles everything an application needs to run: the code, runtime, system tools, libraries, and settings. As a result, the application behaves the same way on a developer laptop, a test server, or a production cluster, as long as the host provides a compatible container runtime, operating system kernel, and CPU architecture. With containerization, developers can build, test, and deploy applications faster and with far fewer dependency and compatibility surprises.

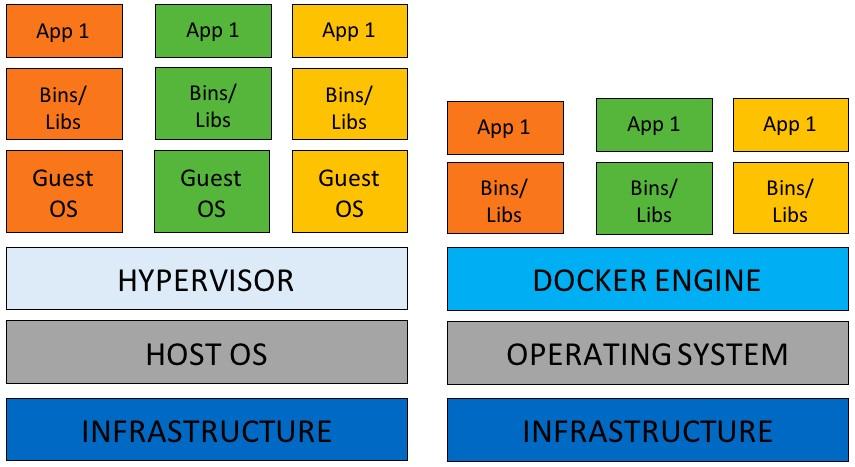

One of the key benefits of containerization is efficient resource utilization. Containers share the host operating system kernel instead of each booting a full guest operating system, so they need fewer resources than virtual machines. This lets more applications run on the same infrastructure, which lowers cost and overhead. Containers also isolate applications from one another: Linux namespaces give each container its own view of processes, networking, and the filesystem, and control groups (cgroups) cap how much CPU and memory it can consume. Because the kernel is shared, this isolation is weaker than a virtual machine's, but it keeps one container from easily interfering with another. Container orchestration tools such as Kubernetes then automate the deployment, scaling, and monitoring of containerized applications across a cluster.
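To make the density argument concrete, here is a small Python sketch that counts how many copies of one workload fit on a host when each copy needs its own CPU and memory plus a fixed per-instance overhead. All numbers are made up for illustration: the host size, the workload's needs, and especially the guest-OS overhead assigned to each VM are assumptions, and the container case is simplified to zero overhead. Real schedulers, including Kubernetes (which places pods by their resource requests), account for more than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu_millicores: int
    memory_mib: int


NO_OVERHEAD = Resources(0, 0)


def workloads_per_host(
    allocatable: Resources,
    per_workload: Resources,
    per_instance_overhead: Resources = NO_OVERHEAD,
) -> int:
    """Count identical workloads that fit on one host.

    Each instance needs its own resources plus a fixed overhead (for example,
    a guest OS when every workload runs in its own VM). Whichever resource,
    CPU or memory, runs out first sets the limit.
    """
    cpu_needed = per_workload.cpu_millicores + per_instance_overhead.cpu_millicores
    mem_needed = per_workload.memory_mib + per_instance_overhead.memory_mib
    return min(
        allocatable.cpu_millicores // cpu_needed,
        allocatable.memory_mib // mem_needed,
    )


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to illustrate the arithmetic.
    host = Resources(cpu_millicores=4000, memory_mib=8192)
    app = Resources(cpu_millicores=250, memory_mib=256)
    vm_overhead = Resources(cpu_millicores=500, memory_mib=1024)  # assumed guest-OS cost
    print("as containers:", workloads_per_host(host, app))        # 16
    print("as VMs:", workloads_per_host(host, app, vm_overhead))  # 5
```

With these hypothetical inputs, the host fits 16 containerized copies but only 5 VM-based ones, because the assumed guest-OS overhead is paid once per instance.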

Deploying and Managing Containers: Utilizing Kubernetes for Orchestration

Containerization has become a core technology for deploying and managing applications efficiently. Because an application and its dependencies are packaged into a lightweight, portable container, the same artifact moves unchanged from development through testing to production, which keeps environments consistent and simplifies releases. These properties, together with better scalability, resource utilization, and isolation, have made containers a popular choice for DevOps teams.

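Running a handful of containers by hand is manageable; running hundreds across many machines is not. Kubernetes automates deployment, scaling, and management at that scale. You declare the desired state, for example "three replicas of this container image", and Kubernetes controllers continuously compare it with what is actually running and correct any difference. This mechanism powers features such as auto-scaling, load balancing across replicas, and self-healing, where crashed containers are restarted and replicas lost when a node fails are recreated on healthy nodes. By leveraging Kubernetes for orchestration, organizations can achieve high availability, fault tolerance, and efficient resource allocation, and spend more of their time delivering value to their users.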
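The "declare desired state, then continuously correct drift" idea behind self-healing can be illustrated with a toy control loop in Python. `ToyReplicaController` is a hypothetical class, not Kubernetes code or its API; it only models one reconciliation pass that creates missing pods and removes surplus ones.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ToyReplicaController:
    """Toy model of a controller keeping `desired` pods running (not Kubernetes code)."""

    desired: int
    running: list[str] = field(default_factory=list)
    _ids: itertools.count = field(default_factory=itertools.count, repr=False)

    def crash(self, pod: str) -> None:
        """Simulate a pod disappearing, e.g. after a node failure."""
        self.running.remove(pod)

    def reconcile(self) -> list[str]:
        """One pass of the control loop: compare desired vs. observed state and act."""
        actions = []
        while len(self.running) < self.desired:
            pod = f"pod-{next(self._ids)}"
            self.running.append(pod)
            actions.append(f"create {pod}")
        while len(self.running) > self.desired:
            pod = self.running.pop()  # remove the newest pod first
            actions.append(f"delete {pod}")
        return actions


if __name__ == "__main__":
    controller = ToyReplicaController(desired=3)
    print(controller.reconcile())  # ['create pod-0', 'create pod-1', 'create pod-2']
    controller.crash("pod-1")
    print(controller.reconcile())  # ['create pod-3']
```

Once actual state matches desired state, `reconcile()` does nothing, so it is safe to run over and over. Kubernetes controllers run loops of this general shape continuously, which is why a crashed or deleted pod is simply replaced rather than waiting for a human to notice.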

Best Practices for Containerization and Kubernetes Deployment

When it comes to containerization and Kubernetes deployment, several best practices help keep the process smooth and efficient. One key practice is to define clear boundaries for your containers so that each one is responsible for a single concern, such as a web server, a background worker, or a database, rather than bundling several services into one image. This makes each piece easier to scale, maintain, debug, and troubleshoot independently.

Another important practice is to let Kubernetes handle orchestration rather than scripting deployments by hand. Describe each workload declaratively, for example as a Deployment, and Kubernetes takes care of rolling it out, scaling it, load balancing traffic across replicas, and replacing failed instances. Finally, monitor your containers and clusters regularly, and tune each container's CPU and memory requests and limits against its observed usage so the scheduler can place workloads efficiently without starving or overcommitting nodes.
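As an example of describing a workload declaratively, the Python sketch below builds a minimal Deployment manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The app name, the image reference, port 8080, the `/healthz` endpoint, and the resource figures are all placeholders you would replace; the liveness probe assumes your application actually serves `/healthz`.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """Build a minimal apps/v1 Deployment running one container per pod.

    Port 8080, the /healthz path, and the resource figures are placeholders.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                            "resources": {
                                # What the scheduler reserves for the pod.
                                "requests": {"cpu": "250m", "memory": "256Mi"},
                                # Hard cap: exceeding the memory limit gets the container OOM-killed.
                                "limits": {"memory": "512Mi"},
                            },
                            # Restart the container if this endpoint stops answering.
                            "livenessProbe": {
                                "httpGet": {"path": "/healthz", "port": 8080},
                                "initialDelaySeconds": 5,
                                "periodSeconds": 10,
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference; replace with your own.
    print(json.dumps(deployment_manifest("web", "registry.example.com/web:1.0"), indent=2))
```

Assuming the script is saved as `manifest.py`, you could pipe its output straight to the cluster with `python manifest.py | kubectl apply -f -`. The single container per pod follows the single-concern guideline, the requests tell the scheduler how much room the pod needs, the memory limit caps runaway usage, and the liveness probe lets Kubernetes restart the container if it stops responding.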

Advanced Strategies for Scaling and Monitoring Containerized Applications

Within containerization and Kubernetes, several advanced strategies can significantly improve how you scale and monitor containerized applications. One key technique is horizontal scaling: adding more pod replicas to spread the workload and absorb rising traffic, rather than giving a single instance more CPU or memory. The Kubernetes Horizontal Pod Autoscaler (HPA) automates this by adjusting the number of pods in a workload, such as a Deployment, based on observed metrics like CPU utilization or custom metrics. Because the HPA reacts as load changes, it keeps performance and resource usage in balance without manual intervention.
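The HPA's core calculation, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and scaling is skipped when that ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is a simplified sketch of that rule with min/max clamping. It deliberately ignores details the real controller handles, such as pods that are not yet ready, missing metrics, and scale-down stabilization windows, and its default bounds are arbitrary assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric).

    Ignores unready pods, missing metrics, and stabilization windows.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: do nothing, which avoids flapping.
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Respect the autoscaler's configured bounds.
    return max(min_replicas, min(max_replicas, proposed))
```

For example, three replicas averaging 90% CPU against a 50% target yield `ceil(3 * 1.8) = 6` replicas, while a reading of 52% against the same target keeps the current count because the ratio falls inside the tolerance band.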

Another crucial strategy is robust monitoring, which gives you insight into the health and performance of your containerized applications. A common combination is Prometheus and Grafana: Prometheus periodically scrapes metrics from your applications and cluster components, stores them as time series, and evaluates alerting rules, while Grafana turns those metrics into dashboards. With this visibility, you can spot bottlenecks before users notice them, right-size resource allocations, and keep your applications highly available, which ultimately improves both the stability of your infrastructure and the experience of your users.
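Prometheus collects metrics by scraping an HTTP endpoint that returns them in its plain-text exposition format. The standard-library Python sketch below serves two hypothetical application metrics at `/metrics` in that format. In a real service you would normally use the official `prometheus_client` library instead, which also handles escaping, labels, and richer metric types such as histograms; the metric names, port 8000, and the `Metric` class here are assumptions for illustration.

```python
from dataclasses import dataclass
from http.server import BaseHTTPRequestHandler, HTTPServer


@dataclass
class Metric:
    """A single unlabeled metric; a hypothetical stand-in for prometheus_client types."""

    name: str
    kind: str  # "counter" or "gauge"
    help_text: str
    value: float = 0


# Hypothetical application metrics; application code would update .value.
METRICS = [
    Metric("app_requests_total", "counter", "Requests handled by the app."),
    Metric("app_queue_depth", "gauge", "Items waiting in the work queue."),
]


def render_metrics(metrics: list[Metric]) -> str:
    """Render metrics in the Prometheus text exposition format.

    Help text is written as-is, so it must not contain backslashes or newlines.
    """
    lines = []
    for m in metrics:
        lines.append(f"# HELP {m.name} {m.help_text}")
        lines.append(f"# TYPE {m.name} {m.kind}")
        lines.append(f"{m.name} {m.value}")
    return "\n".join(lines) + "\n"  # the format requires a trailing newline


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(METRICS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Block forever, serving /metrics on the given port."""
    HTTPServer(("", port), MetricsHandler).serve_forever()
```

Calling `serve()` starts the endpoint; Prometheus would then need a scrape configuration (or, with the Prometheus Operator, a ServiceMonitor) pointing at it, and Grafana can chart the resulting series.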

Final Thoughts

Containerization and Kubernetes offer a powerful way to deploy and manage applications in complex, dynamic environments. Containers make applications portable and efficient to run, and Kubernetes automates the work of scaling, healing, and balancing them across a cluster. By understanding the concepts behind these technologies, teams can streamline their development processes and operate their systems more efficiently. Containerization and Kubernetes are not just a trend but a fundamental shift in how applications are built and deployed, so dive in, experiment, and discover what they can do for your organization.